How to use Nutanix AHV VM Guest pre and post scripts with Snapshots and Veeam Backups (SAP HANA)

We recently had some issues setting up a lab environment for SAP snapshot-based processing with Nutanix AHV VM guest pre and post scripts. Here is the complete guide to make them work:

- Build the VM with SCSI disks.

- Add an IP address and make sure the AHV Virtual Cluster IP can communicate with the VM on TCP 2074.

- Many Linux systems additionally need the dmidecode software package. You can install it, for example, with

yum -y install dmidecode

- Install Nutanix NGT Tools

Go to Prism and select the VM

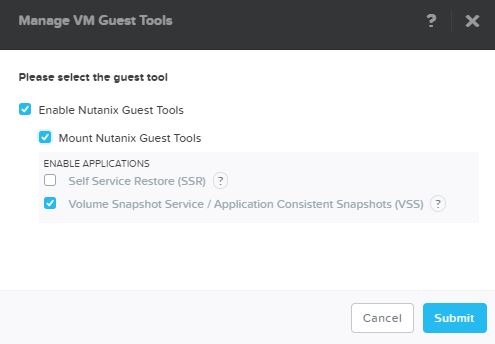

Klick on “Manage Guest Tools”, enable it and check “Mount Nutanix Guest Tools”

Login to the VM and run:

sudo mount /dev/sr0 /mnt

sudo /mnt/installer/linux/install_ngt.py

- Create the pre_freeze and post_thaw scripts:

/usr/local/sbin/pre_freeze

/usr/local/sbin/post_thaw (both need to exist)

On older AHV versions the paths need to be

/sbin/pre_freeze

/sbin/post_thaw (both need to exist)

You can use any sh or python script.

For example

#!/bin/sh

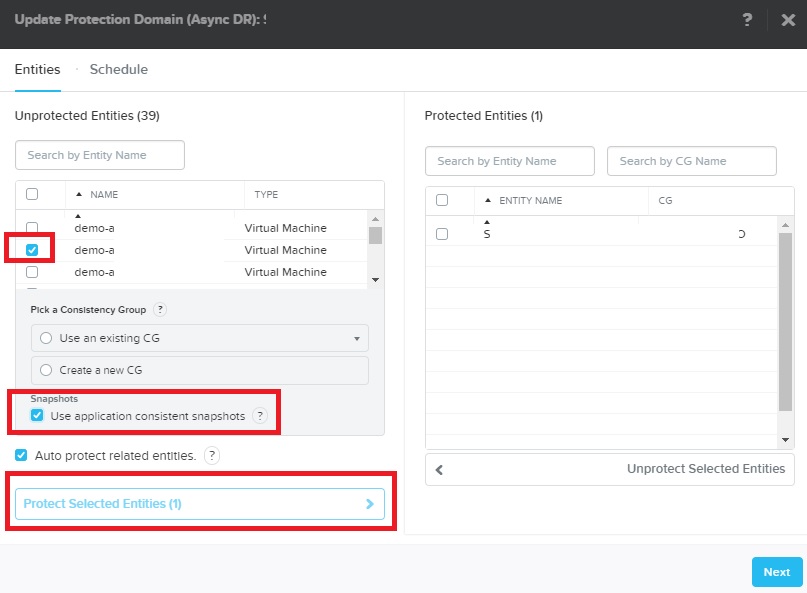

yourcommandTip: Don`t create the script with a Windows editor as the new line command is different. - Create a Nutanix AHV Protection Domain and add a VM to it with enabled “Use application consistent snapshots”.

It do not matter if you schedule the protection group or not.

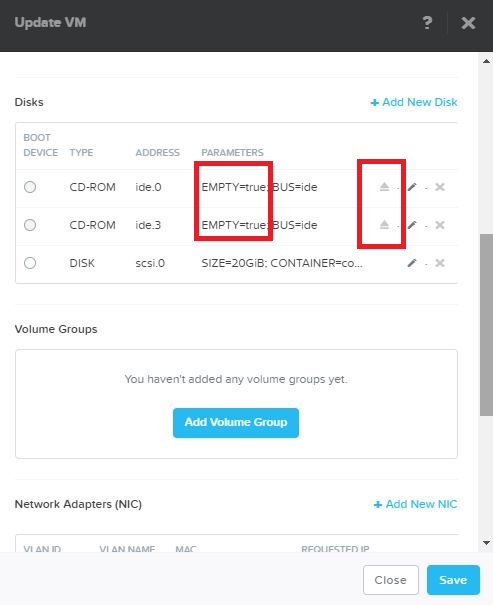

- Make sure no CD/DVD is mounted in the VM's drive. You can check this in the configuration of the VM.

8. Manually create a snapshot for the protection domain with “Create application consistent snapshot” enabled, schedule the protection domain, or use Veeam Availability for Nutanix to back up the protection domain created above.

9. If you are looking for SAP HANA pre-freeze and post-thaw scripts that bring the database into a consistent state for backup, see:

https://github.com/VeeamHub/applications/tree/master/sap-hana
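Before triggering the first application-consistent snapshot, it can help to verify the two hook scripts from the steps above. The following is only a minimal sketch, not part of NGT: the helper names `has_crlf` and `check_hook` are hypothetical, and the check for the executable bit is my assumption about what the guest tools expect.

```shell
# Sketch: sanity checks for the pre_freeze / post_thaw hook scripts.
# Helper names are hypothetical; the executable-bit check is an assumption.

# Return 0 if the file contains Windows-style CRLF line endings.
has_crlf() {
    grep -q "$(printf '\r')\$" "$1"
}

# Print one status word for a hook script: missing, not-executable, crlf or ok.
check_hook() {
    hook="$1"
    if [ ! -f "$hook" ]; then
        echo "missing"
        return 1
    fi
    if [ ! -x "$hook" ]; then
        echo "not-executable"
        return 1
    fi
    if has_crlf "$hook"; then
        echo "crlf"
        return 1
    fi
    echo "ok"
}
```

Call `check_hook /usr/local/sbin/pre_freeze` and `check_hook /usr/local/sbin/post_thaw` (or the /sbin paths on older AHV versions); anything other than `ok` points to one of the problems described above.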

Updated Exchange Tips and Tricks

Hi,

the Exchange Tips and Tricks post was updated again to cover the latest question from a customer who reported that not all log files are truncated correctly in a DAG.

Please find the update here:

https://andyandthevms.com/exchange-dag-vmware-backups-updated-list-of-tips-and-tricks-for-veeam-backup-replication/

And again an update of the Exchange Best Practices post

Hi all :-)

I updated the Exchange best practices blog post again and added some tips around vRDM and Exchange.

Exchange (DAG) VMware Backups: Updated list of tips and tricks for Veeam Backup & Replication

DNS Server repair for Windows VPN

It looks like Windows 10 gives VPN connections a higher or the same priority as your standard network connections. This can lead to a situation where you can no longer access your company's servers and applications once you open a VPN connection. A good example is that you can no longer access DFS resources. The root cause is a wrong DNS server order caused by wrong connection prioritization.

I created a small script that starts a VPN connection and increases the VPN connection's interface metric to a high value (lower priority), so that your other connections get priority when it comes to DNS server selection.

Have fun.

#DNS Server order repair for Win VPN connection usage

#It starts a VPN connection and changes the InterfaceMetric of this connection.

#V1.02

#Author: Andreas Neufert

#Website with most up to date version: andyandthevms.com

#################################

#Input

$vpnconnectionname = "Veeam VPN SPB"

#Start Powershell as Administrator (found at http://stackoverflow.com/questions/7690994/powershell-running-a-command-as-administrator)

if (!([Security.Principal.WindowsPrincipal][Security.Principal.WindowsIdentity]::GetCurrent()).IsInRole([Security.Principal.WindowsBuiltInRole] "Administrator")) { Start-Process powershell.exe "-NoProfile -ExecutionPolicy Bypass -File `"$PSCommandPath`"" -Verb RunAs; exit }

#Start VPN Connection

rasdial $vpnconnectionname

#Lookup which DNS Server is used

write-host "DNS Server"

$pinfo = New-Object System.Diagnostics.ProcessStartInfo

$pinfo.FileName = "nslookup.exe"

$pinfo.RedirectStandardError = $true

$pinfo.RedirectStandardOutput = $true

$pinfo.UseShellExecute = $false

$pinfo.Arguments = "www.google.de"

$p = New-Object System.Diagnostics.Process

$p.StartInfo = $pinfo

$p.Start() | Out-Null

$p.WaitForExit()

$stdout = $p.StandardOutput.ReadToEnd()

$stderr = $p.StandardError.ReadToEnd()

#Write-Host "stdout: $stdout"

#select-string -Pattern "Address" -InputObject $stdout

#$c = $stdout.split(':') | % {iex $_}

[string]$a = $stdout

[array]$b = $a -split [environment]::NewLine

$b[1]

write-host "============================================================================================================="

#Change the interface metric to a high number so that the other connections and their DNS settings get higher priority.

write-host "Changed to:"

#Use the connection name defined at the top instead of a second hard-coded copy.
Set-NetIPInterface -InterfaceAlias $vpnconnectionname -InterfaceMetric 100

#output the new DNS Server address

$pinfo = New-Object System.Diagnostics.ProcessStartInfo

$pinfo.FileName = "nslookup.exe"

$pinfo.RedirectStandardError = $true

$pinfo.RedirectStandardOutput = $true

$pinfo.UseShellExecute = $false

$pinfo.Arguments = "www.google.de"

$p = New-Object System.Diagnostics.Process

$p.StartInfo = $pinfo

$p.Start() | Out-Null

$p.WaitForExit()

$stdout = $p.StandardOutput.ReadToEnd()

$stderr = $p.StandardError.ReadToEnd()

#Write-Host "stdout: $stdout"

#select-string -Pattern "Address" -InputObject $stdout

#$c = $stdout.split(':') | % {iex $_}

[string]$a = $stdout

[array]$b = $a -split [environment]::NewLine

$b[1]

pause

Veeam Backup & Replication Best Practice Guide

With version 8, the Veeam Solutions Architect Team released a new format of the Best Practice Guide.

You can find the most current version under:

It will be updated for v9 soon.

And again another Exchange Blog Post Update…Update 16

Update: Proxy Backup Mode Best Practices

Hi,

by popular request, I updated the Proxy Backup Mode best practices blog entry.

Prioritisation of Veeam Backup & Replication Proxy Modes from my field experience.

Link: Veeam Clickable Demo

If you ever wanted to test or demo Veeam Backup & Replication functionality without installing the product, you can now do this here: http://veeam.foonet.be/

VM File Server with enabled dedup – High change rate every 28 days and how to avoid this

We see more and more customers enabling Windows deduplication within a VM to save space on their file servers. With Windows 2016 this will increasingly become the standard.

With deduplication enabled you will see a ~20x higher change rate every 28 days in block-level backups (e.g. Veeam). The root cause is the garbage collection run of the Windows deduplication engine.

You can find more information here:

http://social.technet.microsoft.com/wiki/contents/articles/31178.deduplication-garbage-collection-overview.aspx

…and can discuss the solutions here:

https://forums.veeam.com/post193743.html#p193743

Exchange Blog Post update (again)

Hi,

I updated my Exchange blog post again and added more tips for sizing Exchange in virtual environments.

https://andyandthevms.com/exchange-dag-vmware-backups-updated-list-of-tips-and-tricks-for-veeam-backup-replication/

Update 1: 20.04.2016 … and again I updated it. This time to document some restore tips and tricks (Exchange+Veeam).

Exchange Blogpost updated

Hi,

I just want to share with you that I updated my Exchange DAG + VMware + Veeam blog post, because problems with 0x800423f VSS log file truncation errors have recently become more frequent.

You can find the blog post here:

https://andyandthevms.com/exchange-dag-vmware-backups-updated-list-of-tips-and-tricks-for-veeam-backup-replication/

Windows 10 background and menu colors – We want the old menu options back!

Hi everybody,

if you are used to seeing a specific color on the menu bar and desktop background, you will likely be irritated by the reduced color selection options of Windows 10.

The following commands open the old menus, where you can set these colors exactly, the old way.

%windir%\system32\control.exe /name Microsoft.Personalization /page pageColorization

%windir%\system32\control.exe /name Microsoft.Personalization /page pageWallpaper

CU… Andy

SAP HANA Backup with Veeam

Hi,

my colleague and friend Tom Sightler created a toolset to back up SAP HANA with Veeam Backup & Replication. He documented everything in the Veeam Forum:

https://forums.veeam.com/veeam-backup-replication-f2/sap-b1-hana-support-t32514.html

Basically it follows the same approach that storage systems like NetApp use for HANA backups. You implement pre and post scripts in Veeam that make HANA aware of the Veeam backups. Log file handling is included as well (how much backup data do you want to keep on the HANA system itself?).

In case of a DB restore, you go to HANA Studio and can access the backup data on the HANA system directly. If you need older versions, you can restore them with the Veeam File Level Recovery Wizard, or more comfortably with the Veeam Enterprise Manager File Restore (Self Service), and then hit the rescan button in the HANA Studio restore wizard. The restored files are detected and you can proceed with the restore.

CU andy

Active Directory and Veeam

Hi,

in this post I will collect more and more information on how to protect Active Directory with Veeam.

To start, let me share the following three things with you:

Veeam's user guide for Veeam Explorer for Active Directory:

https://www.veeam.com/veeam_backup_9_0_explorers_user_guide_en_pg.pdf

To be able to restore a user account or machine account back to its original place, you need an existing tombstone for it in your AD.

Very good tombstone documentation, including all kinds of version/lifetime settings, can be found in the following article.

Yes, it is in German, but you will get the point by just looking at the lists and registry keys.

https://www.faq-o-matic.net/2006/07/28/das-geheimnis-der-tombstone-lifetime/ (German)

http://www.microsofttranslator.com/bv.aspx?from=de&to=en&a=http://www.faq-o-matic.net/2006/07/28/das-geheimnis-der-tombstone-lifetime/ (machine translation to English)

When you install your first Active Directory server, it also creates the recovery certificate for Windows EFS encryption. It is used for all domain members and is automatically placed on the C: drive. In any case, protect this certificate with multiple backups. Without it you cannot renew the certificate (default lifetime is 3 years) or restore EFS-encrypted data. You can configure the certificate renewal process with RSOP.msc by browsing to Computer Configuration\Windows Settings\Security Settings\Public Key Policies\Encrypting File System

Veeam Availability Suite v9 Feature Videos

Veeam released a video overview of all features of its flagship product, Availability Suite.

https://www.youtube.com/playlist?list=PL0afnnnx_OVCyyTnSrNQGKjUZH9h7tYpy

Fast restore and backup with Veeam Backup & Replication and Zarafa

ESXi NTP Service not working?! (for Example with Windows NTP Server)

Hi,

sometimes the ESXi NTP service is a bit tricky (for configuration, see kb.vmware.com/kb/2012069).

If it does not update the time although all outputs show the correct NTP settings when you run “watch ntpq” on the ESXi console, you can try adding the NTP version to /etc/ntp.conf.

Change

“server <NTP name or IP>”

to

“server <NTP name or IP> version 3”

Specifically with a Windows NTP server, you have to add this option.

Yes, this is documented at http://kb.vmware.com/kb/1005092, but it is hidden at the end of the document, and in most cases people work through the first steps before reading the whole document and waste time. And… I didn't find this solution on Google.

Update: There is also a good KB that describes the Windows NTP + VMware ESXi configuration: http://kb.vmware.com/kb/1035833
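The ntp.conf change described above can be sketched as a small sed filter. This is only a sketch under assumptions: the function name `add_ntp_version` is hypothetical, and it assumes the directive is written in lowercase as `server` at the start of the line. It reads the configuration on stdin and writes the result to stdout, so nothing is modified until you have reviewed the output.

```shell
# Sketch: append "version 3" to every "server" line that has no version option yet.
# Reads ntp.conf-style text on stdin and writes the result to stdout.
# The function name is hypothetical; all other lines pass through unchanged.
add_ntp_version() {
    sed '/^server[[:space:]]/{
/[[:space:]]version[[:space:]]/!s/[[:space:]]*$/ version 3/
}'
}
```

For example, `add_ntp_version < /etc/ntp.conf` prints the adjusted configuration; compare it with the original before replacing the file and restarting the NTP service.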

Interview with Anton Gostev about “Agentless” Backup

Hi everybody,

as you might know, Veeam does not install backup agents on the VMs to process application-aware and application- and filesystem-consistent backups. Veeam looks into the VM and its applications, then registers and starts a matching runtime environment that allows application-aware backups.

We recently had an internal discussion about this topic, and Anton Gostev, Vice President of Product Management at Veeam Software, allowed me to share his thoughts and ideas behind Veeam’s unique approach.

Andreas Neufert: “Let´s talk first about the definition of Agents. According to http://en.wikipedia.org/wiki/S

Anton Gostev: “All problems which cause the issue known as “agent management hell” are brought on by the persistency requirement

…(of the agents from other solutions)…

– Need to constantly deploy agents to newly appearing VMs

– Need to update agents on all VMs

– Need to babysit agents on all VMs to ensure reliability (make sure it behaves correctly in the long run – memory leaks, conflicts with our software etc.)

An auto-injected temporary process addresses all of these issues, and the server stays clean of 3rd party code 99.9% of the time.”

Andreas Neufert: “I think we have all been at the point where we needed to install a security patch in our application and had to wait until the backup vendor released a compatible backup agent version. I can also remember having to reboot all servers because of a new version of such an agent (before I joined Veeam). But what happens if the application server/VM is down?”

Anton Gostev: “… Our architecture addresses the following two issues …

– A persistent agent (or in-guest process) requires the VM to be running at the time of backup in order to function. But no VMs are running 100% of the time – some can be shut down! We are equally impacted, however the major difference is that we do not REQUIRE that the in-guest process was operating at the time of backup (all item-level recoveries are still possible, they just require a few extra steps). This is NOT the case with legacy agent-based architectures: a shut-down VM means no item-level recoveries from the corresponding restore point.

– Legacy agent-based architectures require network connectivity from the backup server to the guest OS – rarely available, especially in secure or public cloud environments. We are not impacted, because we can fail over to network-less interactions for our in-guest process. This is NOT the case with legacy agent-based architectures: for them it means no application-aware backup, and no item-level recoveries from the corresponding restore point.”

Andreas Neufert: “Everyone who operates a DMZ knows the problem. You isolate the whole DMZ from your normal internal network, but the VMs need a network connection to the backup server, which also holds data from other systems. So the Veeam approach can bring additional security to the DMZ environment. Thank you Anton!”

Thanks for reading. Please send me comments if you want more interviews on this blog.

Cheers… Andy

vCenter connection limitation and backup in big environments

Hi Team,

Update from 2019-05-20: For some years now, the SOAP modifications within vCenter described below have no longer been needed, as Veeam caches all required vCenter information in RAM, which drastically reduced the vCenter connection count during the backup window. See the Broker Service note here: https://helpcenter.veeam.com/docs/backup/vsphere/backup_server.html?ver=95u4

My friend and workmate Pascal Di Marco ran into VMware connection limitations while backing up 4000 VMs in a very short backup window.

If you run a lot of parallel backup jobs that use the VMware VADP backup API, you can run into two limitations: one on vCenter SOAP connections and one on the NFC buffer size on the ESXi side.

All backup vendors that use VMware VADP implement the VMware VDDK kit in their product, which helps the backup vendor with some standard API calls and also with reading and writing data. So all backup vendors have to deal with the VDDK's own vCenter and ESXi connection count in addition to their own connections. The number of VDDK connections varies from VDDK version to version.

So if you try to back up thousands of VMs in a very short time frame, you can hit these limitations.

If you hit that limitation, you can increase the vCenter SOAP connection limit from 500 to 1000 as described in VMware KB 2004663: http://kb.vmware.com/kb/2004663

EDIT: In vCenter Server 6.0, the vpxd.cfg file is located at C:\ProgramData\VMware\vCenterServer\cfg\vmware-vpx

You can also optimize the ESXi network (NBD) performance by increasing the NFC buffer size from 16384 to 32768 MB and reducing the cache flush interval from 30s to 20s, as described in VMware KB 2052302: http://kb.vmware.com/kb/2052302